Introduction

Data scientists often overstate the certainty of their predictions. I have had engineers laugh at my point predictions and point out several types of errors in my model that create uncertainty. Prediction intervals are an excellent counterbalance for communicating the uncertainty of predictions.

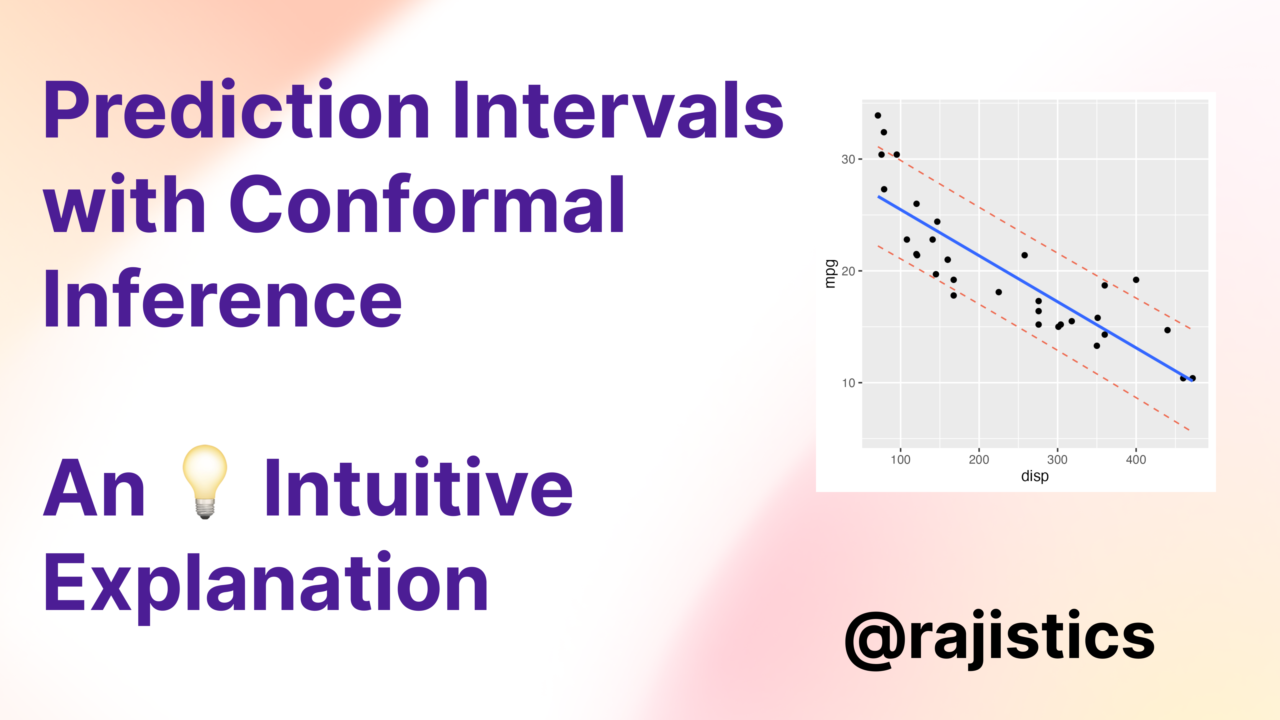

Conformal inference offers a model-agnostic technique for building prediction intervals. It's well known within statistics but less established in machine learning. This post focuses on a straightforward conformal inference technique, though more sophisticated techniques exist that provide more adaptable prediction intervals.
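To make the idea concrete, here is a minimal sketch of one simple variant: split conformal prediction with absolute-residual scores. It is not necessarily the exact method used in the companion notebook. The synthetic data, the least-squares line used as the model, and names such as `predict` and `q_hat` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): y = 2x + Gaussian noise
n = 2000
x = rng.uniform(0, 10, size=n)
y = 2.0 * x + rng.normal(0, 1.0, size=n)

# Three disjoint splits: fit the model, calibrate the interval, check coverage
x_train, y_train = x[:1000], y[:1000]
x_cal, y_cal = x[1000:1500], y[1000:1500]
x_test, y_test = x[1500:], y[1500:]

# Any point predictor works here; a least-squares line keeps the sketch short
slope, intercept = np.polyfit(x_train, y_train, deg=1)

def predict(x_new):
    return slope * x_new + intercept

alpha = 0.1  # target miscoverage, i.e. roughly 90% intervals

# Conformity scores: absolute residuals on the held-out calibration set
scores = np.sort(np.abs(y_cal - predict(x_cal)))
n_cal = len(scores)

# Finite-sample corrected rank: the ceil((n + 1) * (1 - alpha))-th smallest score
k = int(np.ceil((n_cal + 1) * (1 - alpha)))
q_hat = scores[k - 1] if k <= n_cal else np.inf

# Constant-width interval around each point prediction
lower = predict(x_test) - q_hat
upper = predict(x_test) + q_hat

coverage = np.mean((y_test >= lower) & (y_test <= upper))
print(f"half-width: {q_hat:.3f}, empirical coverage: {coverage:.3f}")
```

The interval has the same width everywhere because a single quantile `q_hat` is applied to every prediction. That is the main limitation the more adaptable techniques address.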

I have created a Colab 📓 companion notebook at https://bit.ly/raj_conf and a YouTube 🎥 video that provides a detailed explanation. The walkthrough is a toy example for learning how conformal inference works. Typical applications will use a more sophisticated methodology, along with the implementations found in the resources below.

For Python folks, a great package for getting started with conformal inference is MAPIE (Model Agnostic Prediction Interval Estimator). It works for tabular and time series problems.

Further Resources:

Quick intro to conformal prediction using MAPIE, on Medium

A Gentle Introduction to Conformal Prediction and Distribution-Free Uncertainty Quantification (paper)

Awesome Conformal Prediction (lots of resources)