05 Apr 2016

Ever since I ran across RNNs, they have intrigued me with their ability to learn. The best background is Denny Britz’s tutorial, Karpathy’s totally accessible and fun post on character-level language models, and Colah’s detailed descriptions of LSTMs. Besides all the fun examples of generating content with RNNs, other people have been applying them and winning Kaggle competitions and the ECML/PKDD challenge.

I am still blown away by how RNNs can learn to add. RNNs are trained on thousands of examples and can learn how to sum numbers. For example, the Keras addition example shows how to add two numbers of up to 5 digits each (e.g., 54678 + 78967). It achieves 99% train/test accuracy in 30 epochs with a one-layer LSTM (128 hidden nodes) and 550k training examples.

My eventual goal is to use RNNs to study various sequenced data (such as the NBA SportVu data), so I thought I should start simple. I wanted to teach an RNN to add a series of numbers. For example: 5+7+9. The rest of the post discusses this journey.

1st Grade Model

My first model taught an RNN to add between 5 and 15 single-digit numbers. This would be at the level of a first grader in the US. For example, using a 2-layer LSTM network with 100 hidden units, a batch size of 50 training examples, and 5000 epochs, the RNN summed:

8+6+4+4+0+9+1+1+7+3+9+2+8 as 66.2154007

This isn’t too far from the actual answer of 62. The Keras addition example shows that with even more examples/training, the RNN can get much better. The code for this RNN is available as a gist using tensorflow. I made this in a notebook format so it’s easy to play with.
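To make the task concrete, here is a minimal sketch of how training examples like the one above might be generated. It is not the code from the gist: the names `make_example` and `pad`, and the choice to right-pad with zeros, are assumptions made for illustration.

```python
import random

def make_example(rng, min_len=5, max_len=15):
    """Return (digits, total) for one random addition problem."""
    n = rng.randint(min_len, max_len)               # how many numbers to add
    digits = [rng.randint(0, 9) for _ in range(n)]  # single-digit numbers only
    return digits, sum(digits)

def pad(digits, max_len=15, fill=0):
    """Right-pad with zeros so every sequence has the same length."""
    return digits + [fill] * (max_len - len(digits))

rng = random.Random(0)                              # fixed seed for repeatability
batch = [make_example(rng) for _ in range(50)]      # one batch of 50 examples
inputs = [pad(d) for d, _ in batch]
targets = [t for _, t in batch]

# The example from the post, checked by hand:
post_example = [8, 6, 4, 4, 0, 9, 1, 1, 7, 3, 9, 2, 8]
print(sum(post_example))  # 62
```

Padding with zeros is convenient for this particular task because adding 0 does not change the target sum.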

There are lots of parameters to tweak with RNN models, such as the number of hidden units, epochs, batch size, dropout, and learning rate. Each of these affects the model differently. For example, increasing the number of hidden units provides more capacity for learning, but the model consequently takes longer to train. The chart below shows the effect of different choices. Please take the time to really study the role of hidden units. It’s a dynamic plot, so you can zoom in and examine each series individually by clicking on the legend.

02 Apr 2016

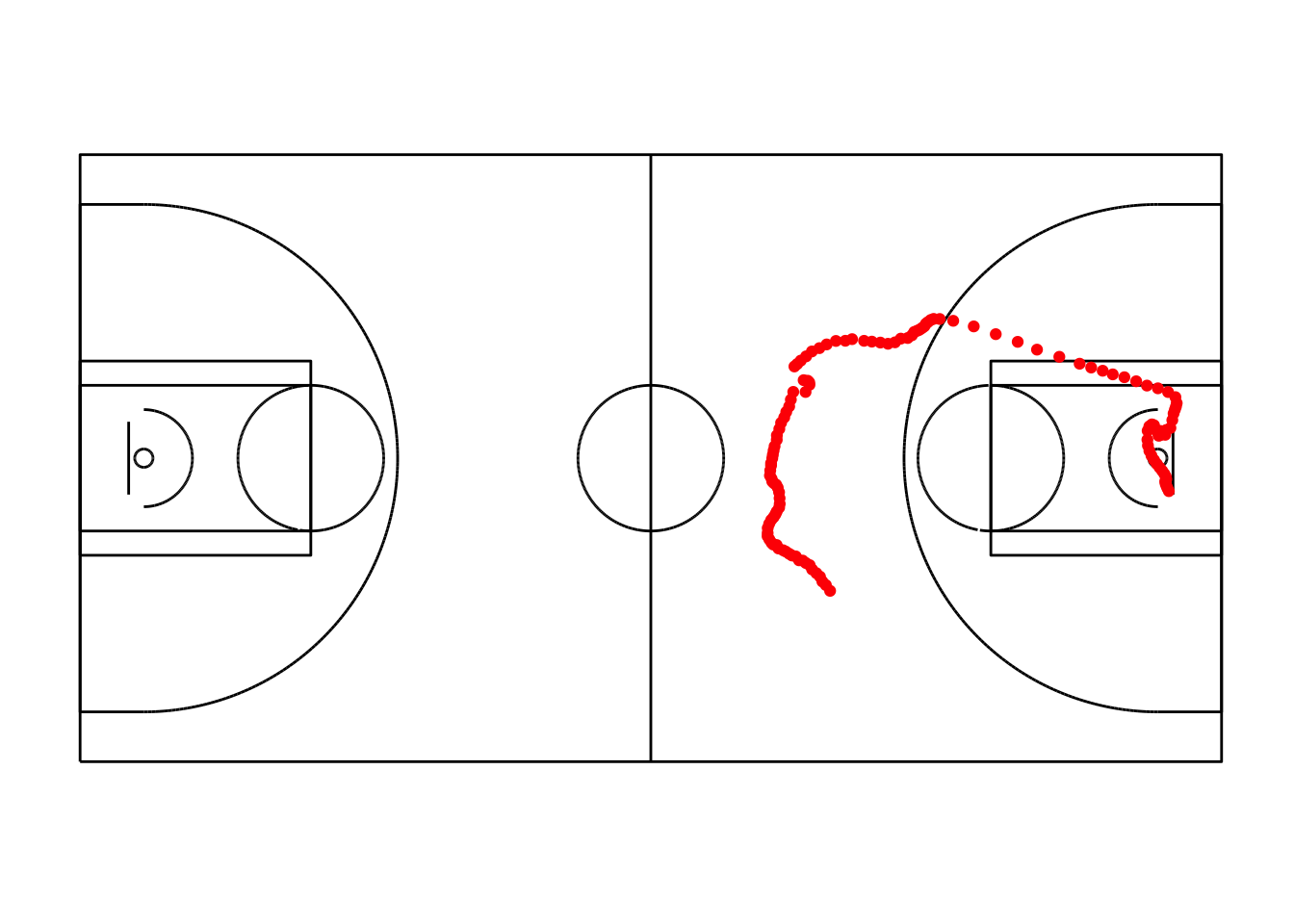

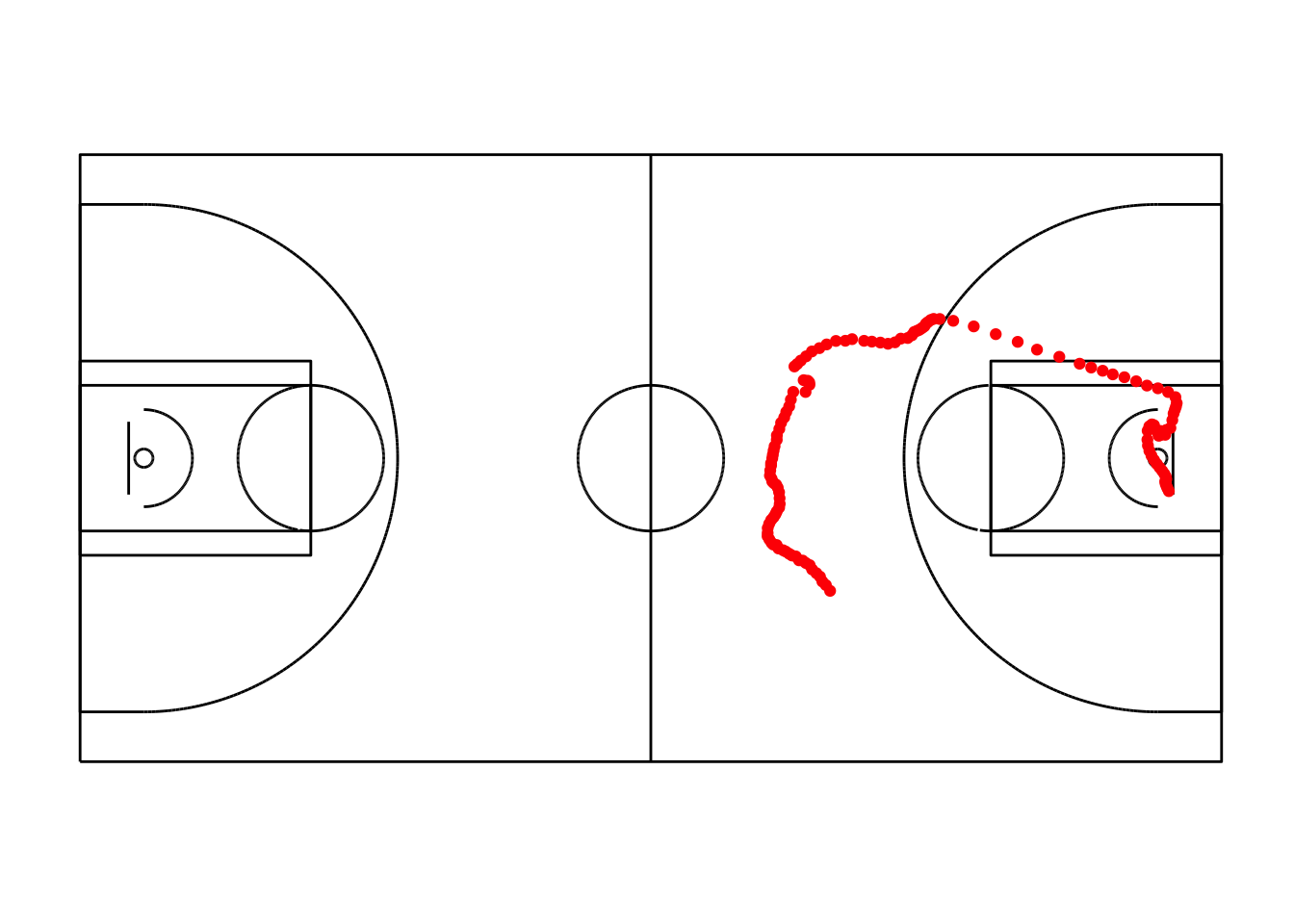

This post shares some of the code that I have created for analyzing NBA SportVu data. For background, the NBA SportVu data is motion data for the basketball and players, captured 25 times a second. For a typical NBA game, this means about 2 million rows of data. The data for over 600 NBA games (first half of the 2015-2016 season) is available. This is over a billion rows of telematics (IoT) type data. This is a gold mine, and here are some early pieces from studying that data.

The first is basic EDA on the movement data. This code allows you to start analyzing the ball and player movement.

The next markdown, PBP, shows how to merge play by play data with the SportVu movement data. This allows using the annotated data which contains information on the type of play, score, and home/visitor info.
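As a rough illustration of the idea, the sketch below attaches play-by-play fields to movement rows that share an event identifier. It is only a sketch under stated assumptions: the field names (`event_id`, `play_type`, `score`) and the toy rows are hypothetical, and the PBP markdown may join the two sources differently.

```python
def merge_pbp(moments, pbp_rows, key="event_id"):
    """Inner join: attach play-by-play fields to each movement row.

    `key` is a hypothetical shared event identifier.
    """
    pbp_by_event = {row[key]: row for row in pbp_rows}
    merged = []
    for moment in moments:
        play = pbp_by_event.get(moment[key])
        if play is None:
            continue  # drop movement rows that have no annotated play
        merged.append({**moment, **play})
    return merged

# Toy rows with made-up values, for illustration only.
moments = [
    {"event_id": 1, "game_clock": 715.20, "x": 45.1, "y": 22.3},
    {"event_id": 1, "game_clock": 715.16, "x": 45.3, "y": 22.1},
    {"event_id": 2, "game_clock": 700.00, "x": 12.0, "y": 30.5},
    {"event_id": 3, "game_clock": 690.00, "x": 60.2, "y": 10.0},
]
pbp = [
    {"event_id": 1, "play_type": "jump shot", "score": "2-0"},
    {"event_id": 2, "play_type": "rebound", "score": "2-0"},
]

merged = merge_pbp(moments, pbp)
for row in merged:
    print(row["event_id"], row["game_clock"], row["play_type"])
```

An inner join is used here so that every merged row carries annotations; keeping unmatched movement rows instead would be an equally reasonable choice.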

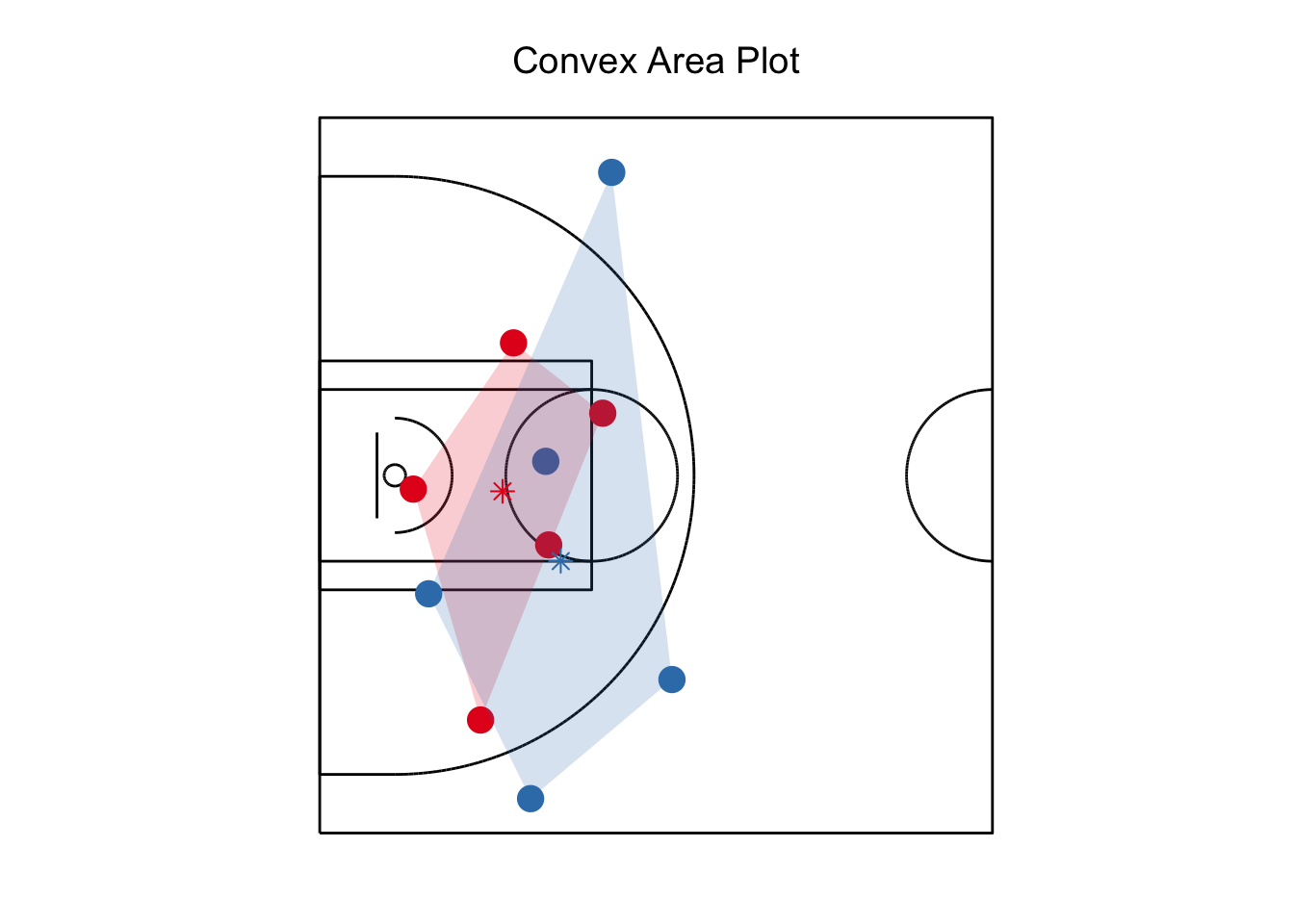

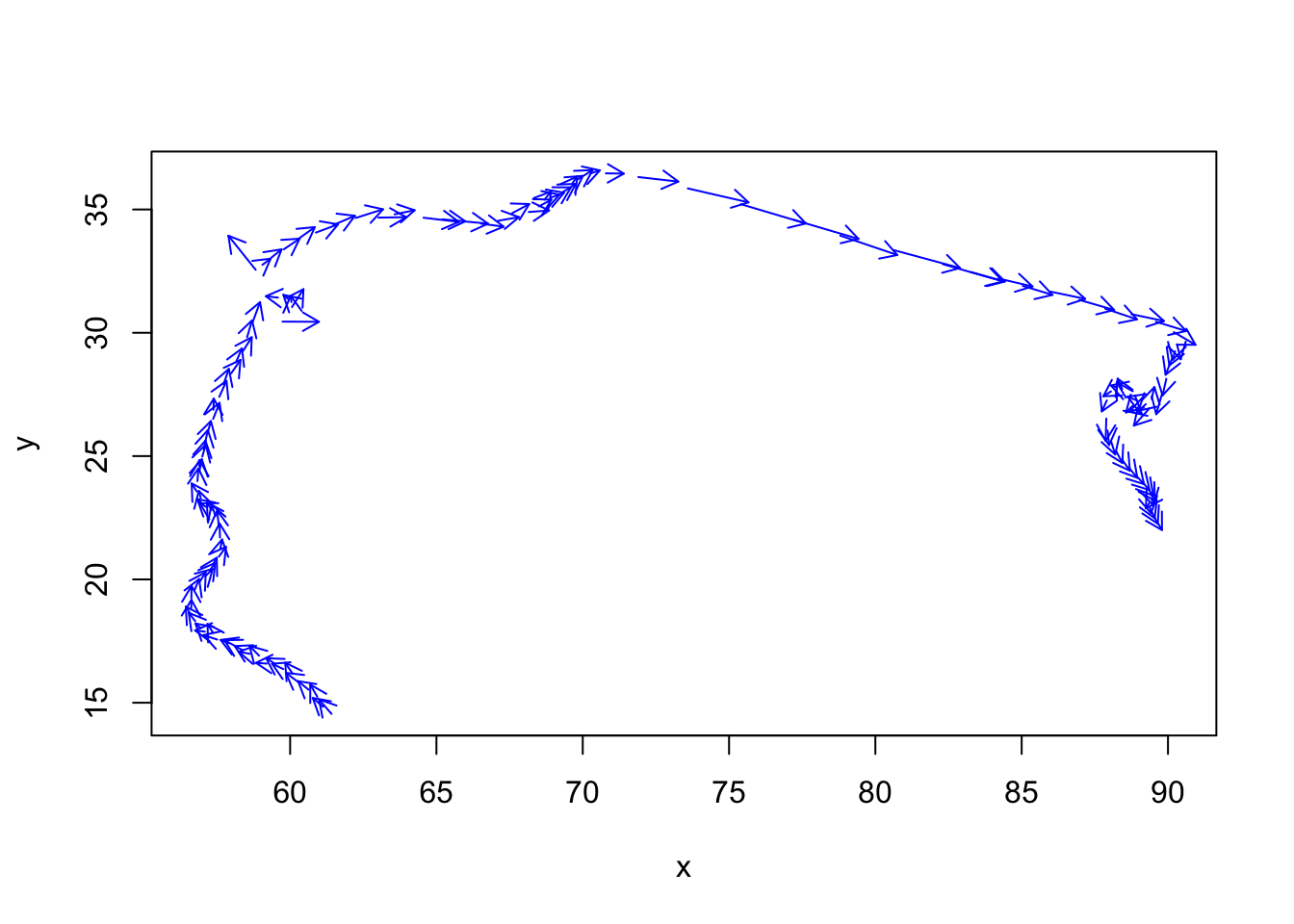

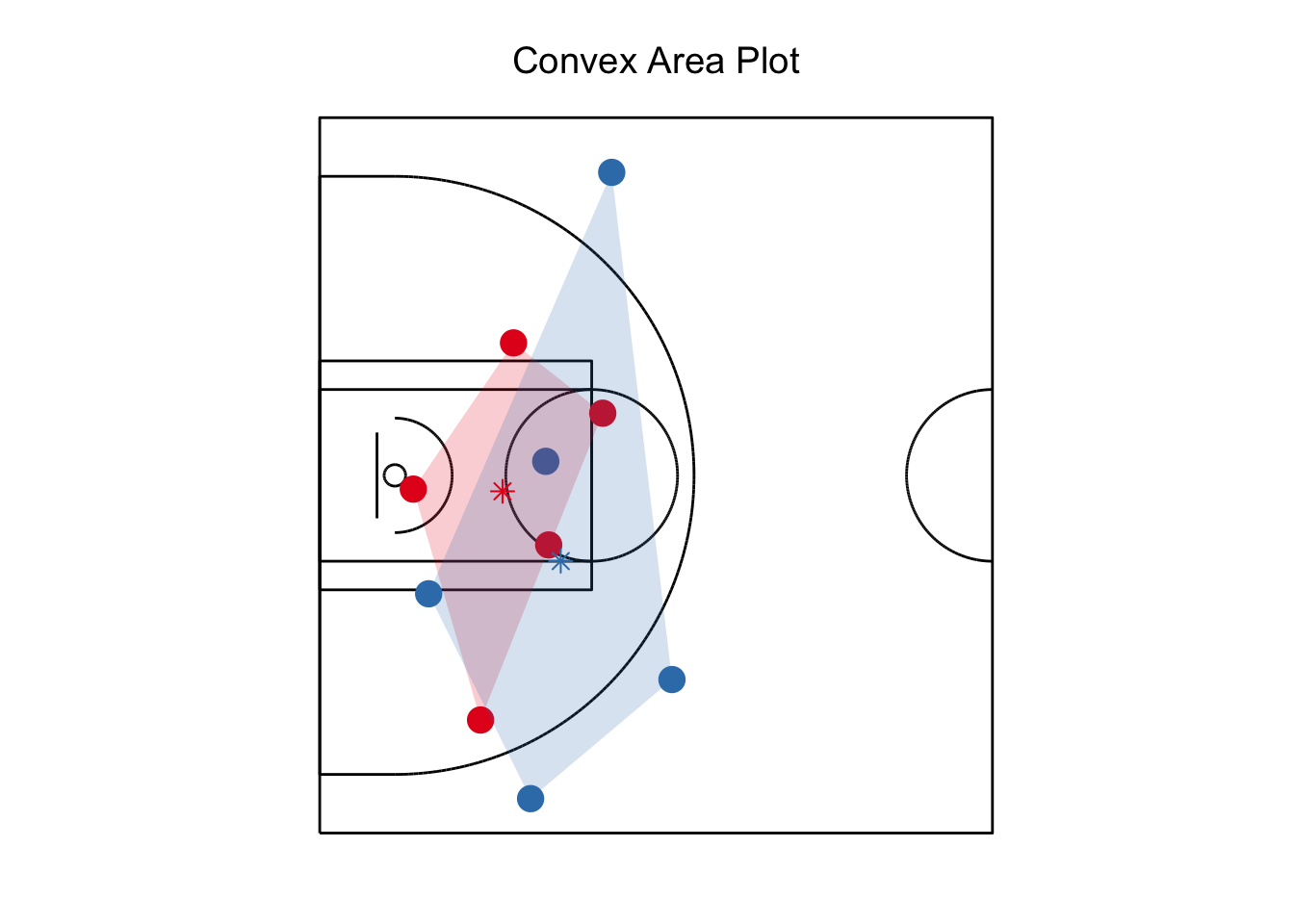

The next set of documents starts analyzing the data. The first measures player spacing using convex hulls. The next shows how to calculate player velocity, acceleration, and jerk. (I really wanted to do a post on the biggest jerk in the NBA, but unfortunately the jerk data is way too noisy.)
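Both ideas can be sketched in a few lines of plain Python. This is not the code from the repo: the hull uses Andrew's monotone chain algorithm with the shoelace formula for area, the derivatives are simple finite differences at the 25 samples per second mentioned above, and all function names and the toy coordinates are assumptions.

```python
FPS = 25  # SportVu sampling rate: 25 samples per second

def cross(o, a, b):
    """Z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula."""
    total = 0
    for i, (x1, y1) in enumerate(vertices):
        x2, y2 = vertices[(i + 1) % len(vertices)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

def derivative(values, fps=FPS):
    """Finite difference between consecutive samples, per second."""
    return [(b - a) * fps for a, b in zip(values, values[1:])]

# Spacing: five players at made-up court positions; one stands inside the hull.
players = [(0, 0), (10, 0), (10, 10), (0, 10), (5, 5)]
spacing = polygon_area(convex_hull(players))
print(spacing)  # 100.0

# Kinematics along one axis for made-up positions at consecutive frames.
x = [0, 1, 4, 9, 16]
velocity = derivative(x)
acceleration = derivative(velocity)
jerk = derivative(acceleration)
print(velocity, acceleration, jerk)
```

Each round of differencing multiplies small position errors by the frame rate, which is one plausible reason the jerk values end up so noisy on real tracking data.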


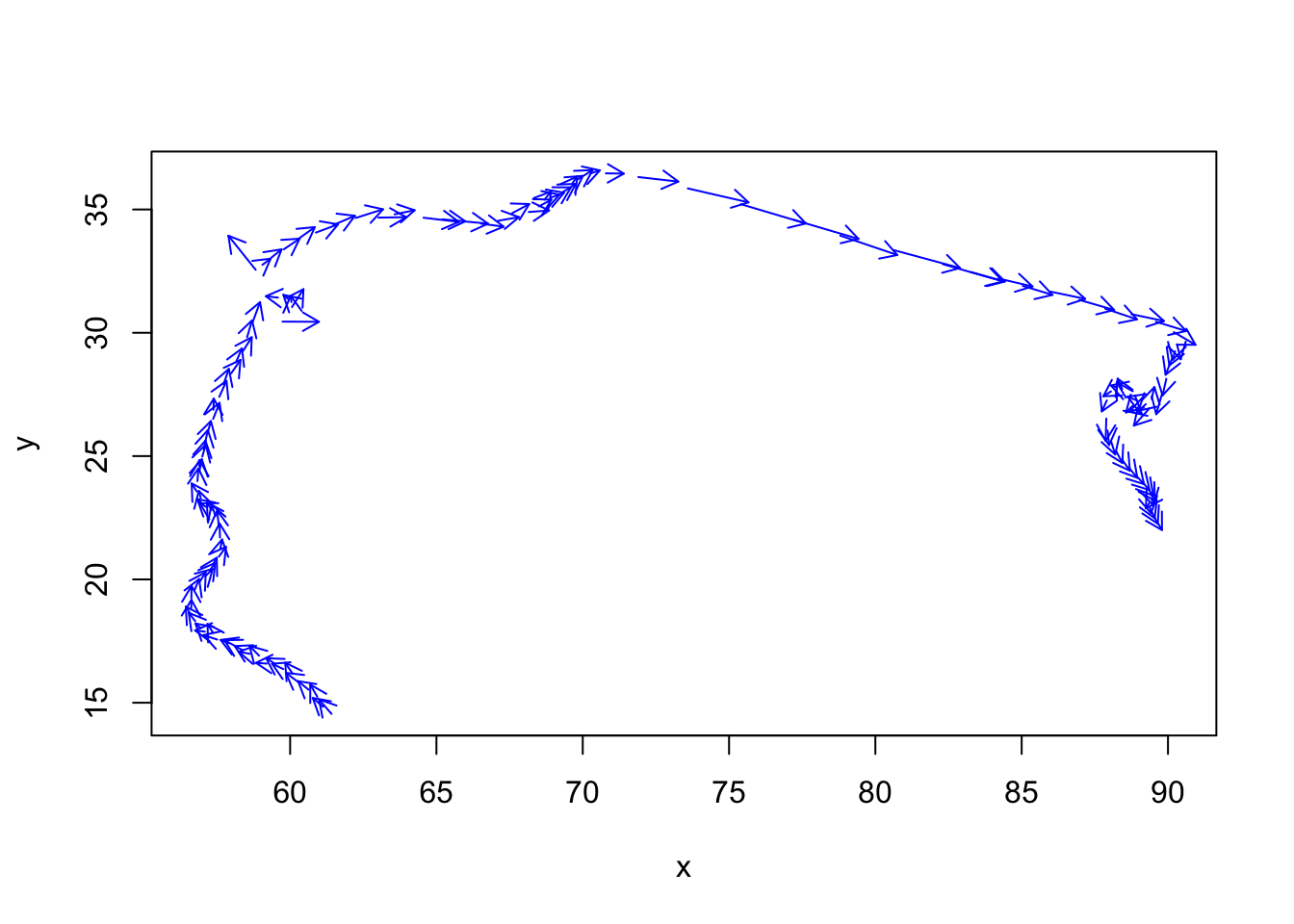

The third document offers a few different ways for analyzing player and ball trajectories.


You can find all these files at my SportVu Github repo.

01 Apr 2016

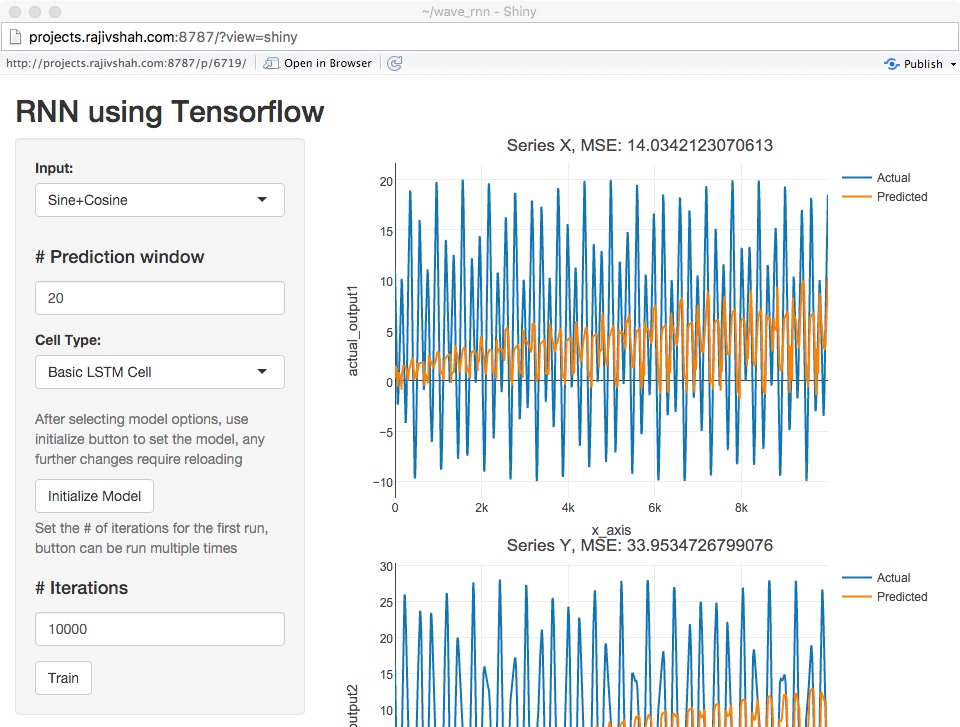

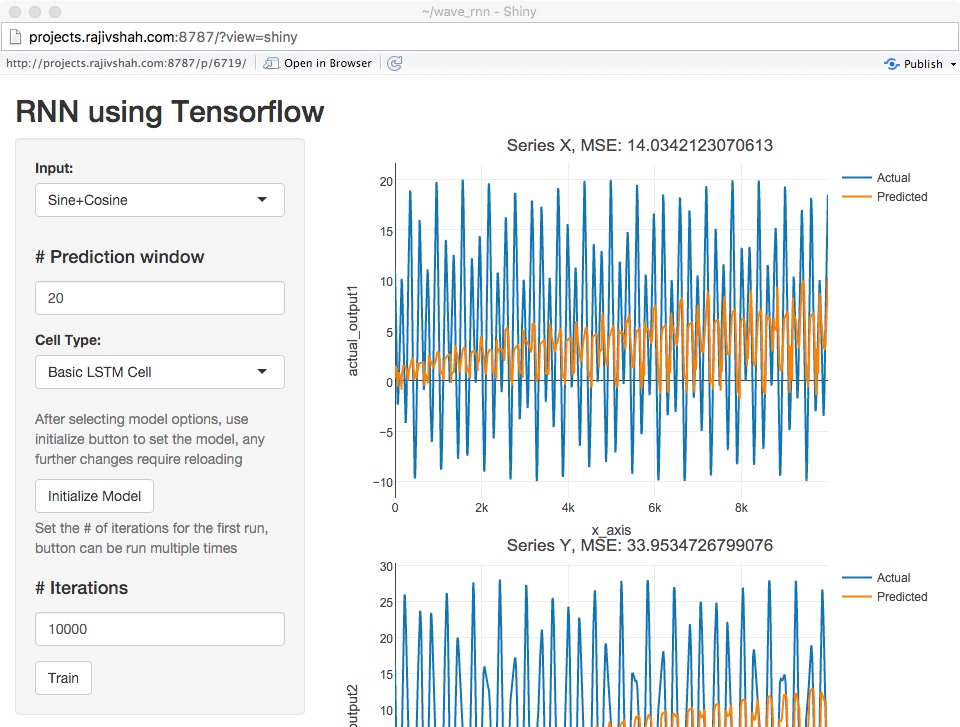

I built a GUI front end for tensorflow using shiny; the code is available on Github. The shiny app allows trying different inputs, RNN cell types, and even optimizers. The results are shown with plots as well as a link to tensorboard. The app allows anyone to try out these models with a variety of modelling options.

The code for the shiny web app is based on work by Sachin Joglekar. Sachin focused on RNNs that had two numeric inputs. (This is slightly different from most RNN examples, which focus on language models.)

Sachin’s code was modified to allow different cell types and reworked so it could be called from rPython. The shiny web app relies on rPython to run the tensorflow models. There is also an iPython notebook in the repository if you would like to test this outside of shiny.

Live Demo:

I have a live demo of this app, but it’s flaky. Building RNN models is computationally intensive, and the shiny front end is intended to be used on development boxes with tensorflow. My live demo app is limited in several ways. First, the server lacks the horsepower to build models quickly. Second, if the instructions below are not carefully followed, the app will crash. Third, it’s not designed for multiple people building different types of models at the same time. Finally, the tensorboard application sometimes stops running, so the link to tensorboard within the live demo app may not work. Again, to really use this app, please install it locally.

The requirements for the app are tensorflow and numpy on the Python side, and Shiny, Metrics, plotly, and rPython on the R side. rPython can be difficult to install/configure, so please verify that rPython is working correctly if you are having problems running the code.

Using the App:

To use the app, select your model options. For the inputs, there are three options of increasing complexity. Steps for prediction window refers to how far ahead the model is supposed to predict. For this data, 20s seemed a reasonable window. For Cell Type, select one of the cell types and press Initialize Model. Then select iterations (max of 10,000) and press Train. After a few seconds, you will see the output.
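One common way to set up a prediction window is to pair each input sample with the value a fixed number of steps later. The sketch below shows that idea only; the function name `make_windows` and the toy series are assumptions, and the app may build its training pairs differently.

```python
def make_windows(series, steps_ahead):
    """Pair each value with the value `steps_ahead` samples later."""
    if steps_ahead < 1 or steps_ahead >= len(series):
        raise ValueError("steps_ahead must be between 1 and len(series) - 1")
    inputs = series[:-steps_ahead]   # values the model sees
    targets = series[steps_ahead:]   # values it should predict
    return list(zip(inputs, targets))

series = list(range(10))             # toy signal: 0, 1, ..., 9
pairs = make_windows(series, steps_ahead=3)
print(pairs[0], pairs[-1], len(pairs))  # (0, 3) (6, 9) 7
```

A larger window leaves fewer training pairs from the same series and generally makes the prediction task harder.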

Take advantage of the plots to zoom in and out and see the shape of the actual and predicted outputs. To further improve the model, you can add iterations by pressing the train button. The plots show how the RNN model is learning and getting better at predicting the output.

To try a new model, select a new cell type and press initialize model. Then select the number of iterations and press train.

If the app crashes, no worries, it happens. I have not accounted for everything that could go wrong.
