Introduction

This post covers 3 easy-to-use 📦 packages to get started with explanations for text models. You can also check out the Colab 📓 companion notebook at https://bit.ly/raj_explain and the YouTube 🎥 video for a deeper treatment.

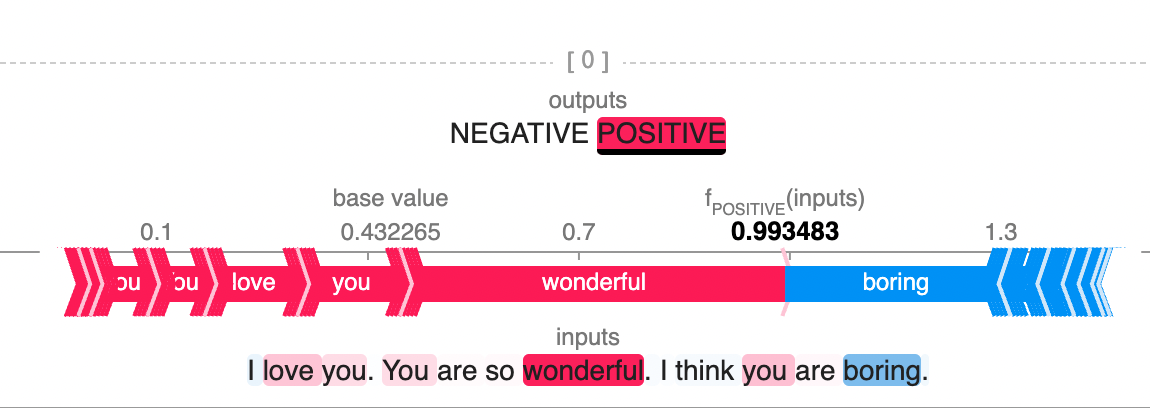

Explanations show why a model made a particular prediction. In the case of text, they highlight how each part of the text influenced the prediction. They are helpful for 🩺 diagnosing model issues, 👀 showing stakeholders how a model is working, and 🧑‍⚖️ meeting regulatory requirements. Here is an explanation 👇 using shap. For more on explanations, check out the explanations in machine learning video.

Let’s review 3 packages you can use to get explanations. All of these work with transformers, provide visualizations, and only require a few lines of code.

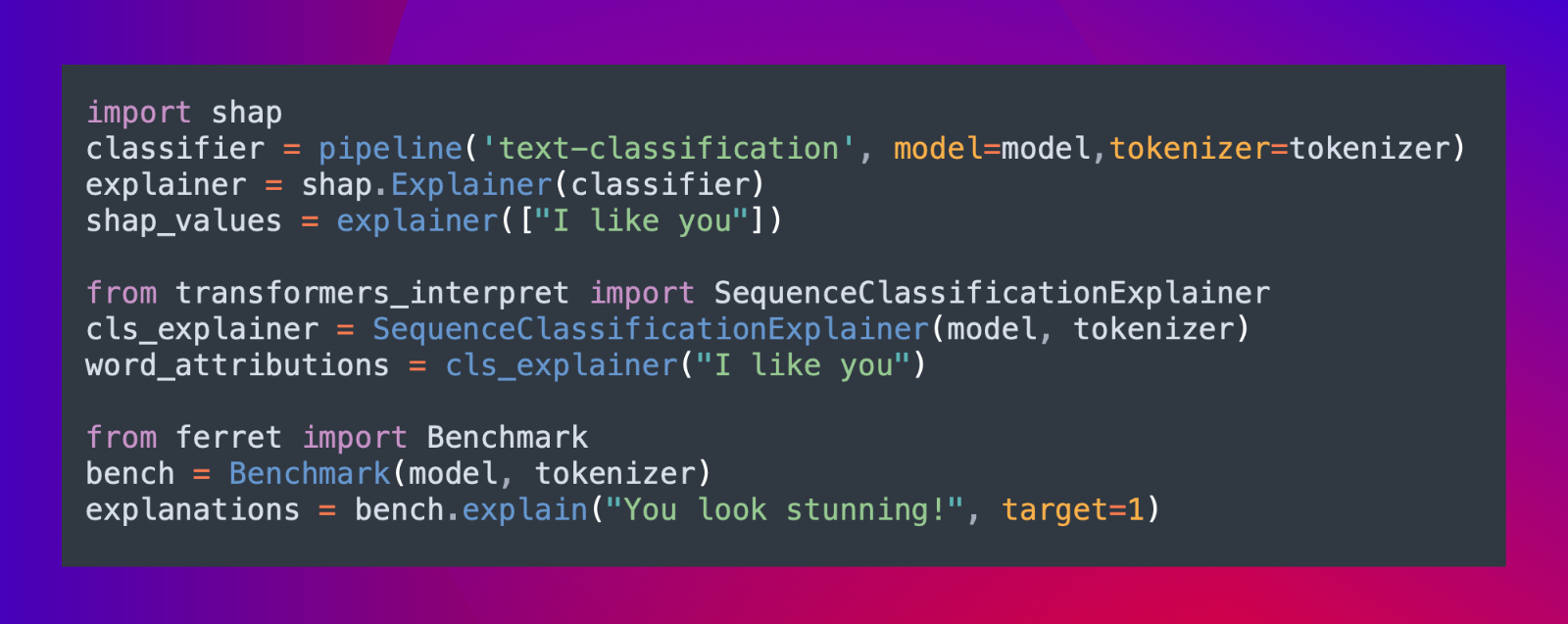

Shap

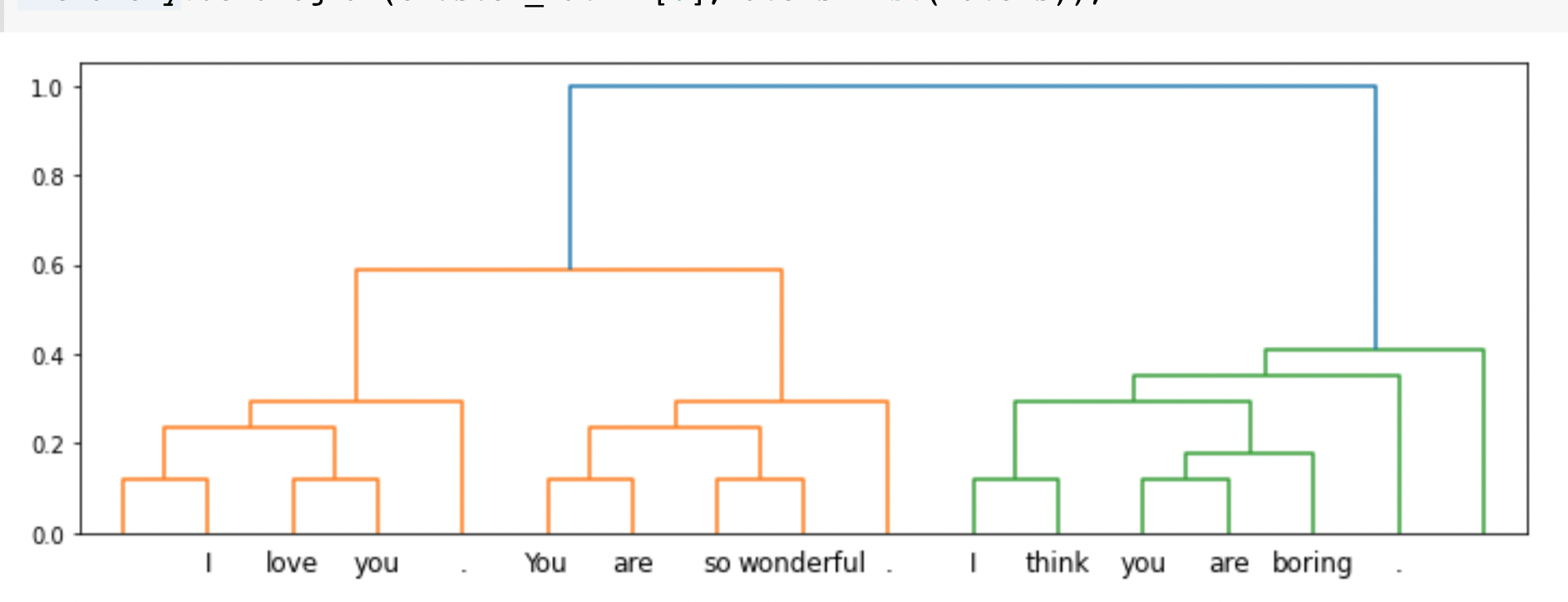

- SHAP is a well-known, well-regarded, and robust package for explanations. When working with text, SHAP typically defaults to a Partition SHAP explainer. This method makes the SHAP computation tractable by using hierarchical clustering and Owen values. The image here shows the clustering for a simple phrase. If you want to learn more about Shapley values, I have a video on Shapley values, and a deep dive on the Partition SHAP explainer is here.
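To get a feel for what is being approximated, here is a minimal sketch of exact Shapley values computed by brute force over every token subset. This is not the shap library's Partition explainer: `toy_score`, `WEIGHTS`, and `shapley_values` are made-up names for illustration, and the "model" is a hand-written scoring function. The brute-force loop visits 2^n subsets, which is exactly the cost that clustering-based methods are designed to avoid.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy "model" (an assumption, not a real classifier):
# a sentiment score for whichever tokens are kept (unmasked).
WEIGHTS = {"great": 2.0, "movie": 0.5, "not": -1.0}

def toy_score(tokens):
    """Score of the text when only `tokens` are kept."""
    score = sum(WEIGHTS.get(t, 0.0) for t in tokens)
    # Interaction term: "not" flips the contribution of "great".
    if "not" in tokens and "great" in tokens:
        score -= 2 * WEIGHTS["great"]
    return score

def shapley_values(tokens, value_fn):
    """Exact Shapley values; assumes the tokens are unique."""
    n = len(tokens)
    values = {}
    for i, tok in enumerate(tokens):
        others = tokens[:i] + tokens[i + 1:]
        phi = 0.0
        for k in range(n):  # k = size of the subset that excludes `tok`
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                kept = list(subset)
                # Marginal contribution of `tok` when added to this subset.
                phi += weight * (value_fn(kept + [tok]) - value_fn(kept))
        values[tok] = phi
    return values

tokens = ["not", "great", "movie"]
phi = shapley_values(tokens, toy_score)
for tok in tokens:
    print(f"{tok:>6}: {phi[tok]:+.2f}")
```

The values add up to the difference between the full-text score and the empty-text score, which is a handy sanity check for any Shapley-style explanation.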

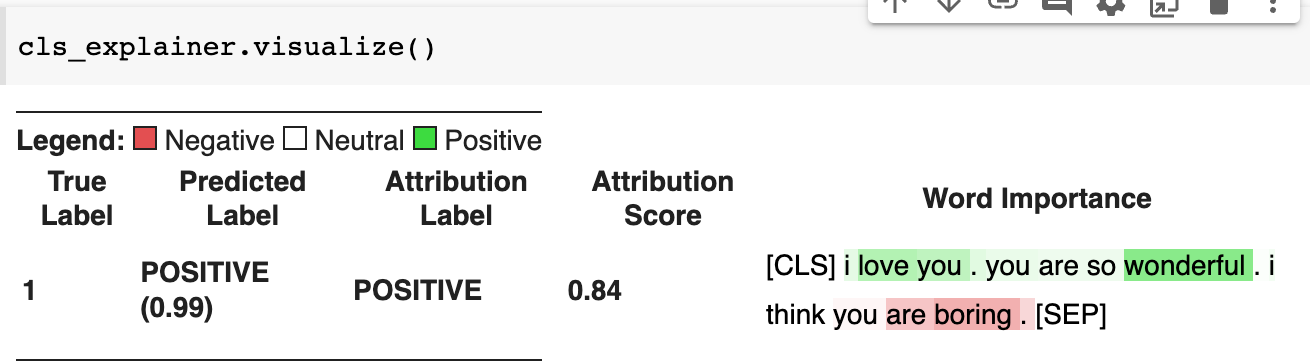

Transformers Interpret

- Transformers Interpret uses Integrated Gradients from Captum to calculate the explanations. This approach is 🐇 quicker than shap! Check out this space to see a demo.
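If Integrated Gradients is new to you, the idea can be sketched in plain Python. This is a toy illustration, not the Captum or Transformers Interpret implementation: `toy_model`, its weights, and the all-zeros baseline are assumptions, and the gradient is estimated by finite differences where a real implementation would use autograd. The method averages gradients along a straight path from a baseline input to the actual input, then scales by the input difference.

```python
import math

def toy_model(x):
    """Hypothetical differentiable 'classifier': sigmoid of a weighted sum."""
    w = [1.5, -2.0, 0.5]
    z = sum(wi * xi for wi, xi in zip(w, x)) + 0.1
    return 1.0 / (1.0 + math.exp(-z))

def gradient(f, x, eps=1e-5):
    """Central-difference gradient of f at x (stand-in for autograd)."""
    grads = []
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        grads.append((f(hi) - f(lo)) / (2 * eps))
    return grads

def integrated_gradients(f, x, baseline, steps=50):
    """Approximate the path integral of gradients with a midpoint rule."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        # Point on the straight line from the baseline to the input.
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(f, point)):
            total[i] += g
    # Scale the averaged gradient by the input-minus-baseline difference.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]

x = [1.0, 0.5, 2.0]          # one made-up feature value per "token"
baseline = [0.0, 0.0, 0.0]   # assumed "no information" input
attributions = integrated_gradients(toy_model, x, baseline)
print(attributions)
print(sum(attributions), toy_model(x) - toy_model(baseline))
```

The two numbers on the last line should nearly match: the attributions sum to the change in model output between the baseline and the input.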

Ferret

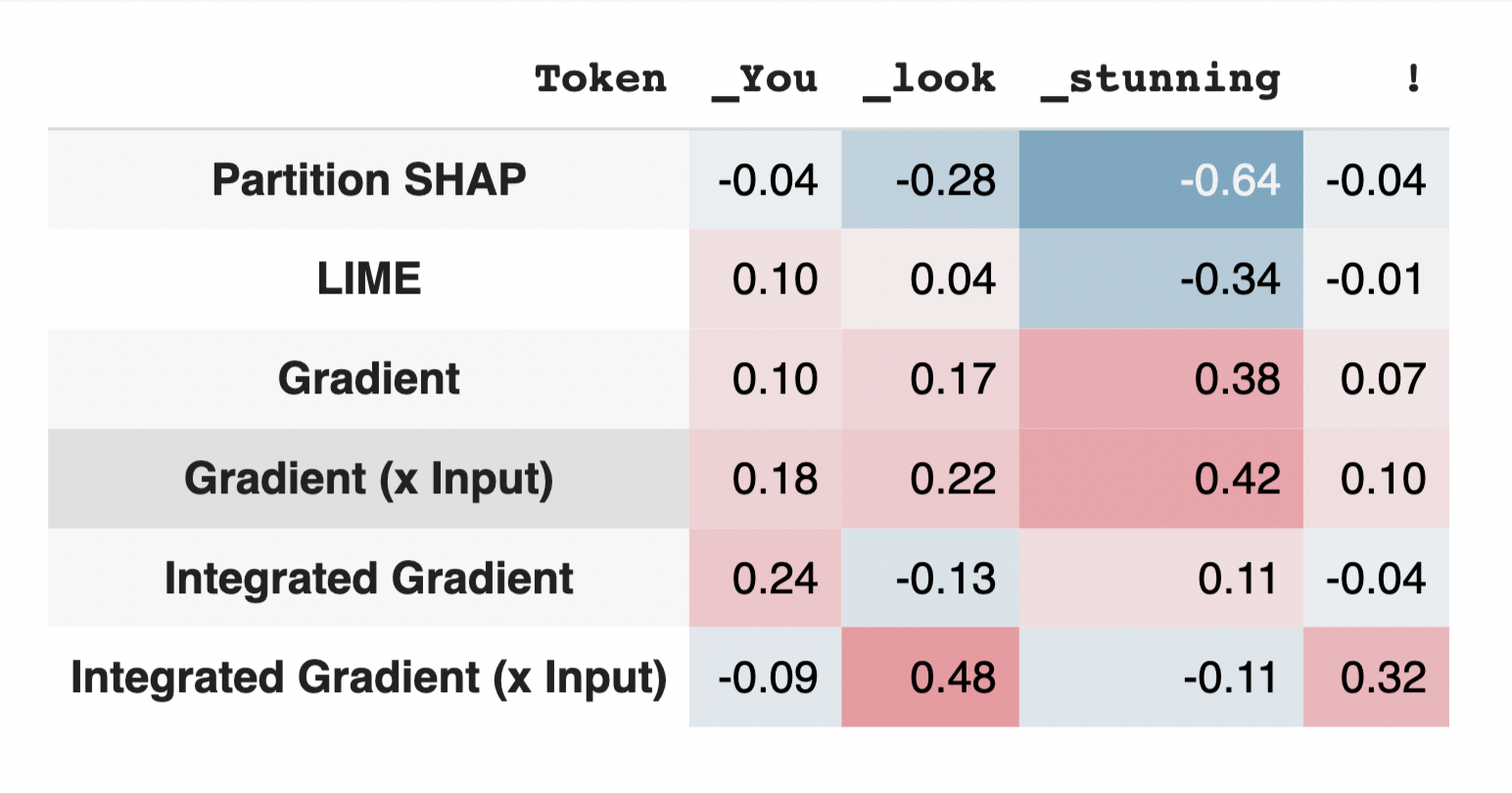

Ferret is built for benchmarking interpretability techniques and includes multiple explanation methodologies (including Partition SHAP and Integrated Gradients). A Spaces demo for ferret is here, along with a paper that explains the various metrics incorporated in ferret.

You can see below how explanations can differ when using different explanation methods. This is a great reminder that explanations for text are complicated and need to be appropriately caveated.
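You can reproduce this kind of disagreement on a tiny scale. The sketch below does not use ferret: it scores a made-up three-token sentence with a hand-written function (`score`, `W`, and the token list are all assumptions) and explains it two ways, with leave-one-out occlusion and with Shapley values averaged over all token orderings. Because the toy model has an interaction between "never" and "boring", the two methods rank the tokens differently.

```python
from itertools import permutations

# Hypothetical toy scoring function with a negation interaction.
W = {"never": -0.5, "boring": -2.0, "film": 0.25}

def score(tokens):
    s = sum(W[t] for t in tokens)
    if "never" in tokens and "boring" in tokens:
        s += 4.0  # "never boring" reads as positive
    return s

def leave_one_out(tokens, f):
    """Attribution = drop in score when a single token is removed."""
    full = f(tokens)
    return {t: full - f([u for u in tokens if u != t]) for t in tokens}

def shapley(tokens, f):
    """Average marginal contribution over every ordering of the tokens."""
    phi = {t: 0.0 for t in tokens}
    orders = list(permutations(tokens))
    for order in orders:
        kept = []
        for t in order:
            before = f(kept)
            kept = kept + [t]
            phi[t] += f(kept) - before
    return {t: v / len(orders) for t, v in phi.items()}

def ranking(attr):
    """Tokens sorted from most to least influential by absolute value."""
    return sorted(attr, key=lambda t: -abs(attr[t]))

tokens = ["never", "boring", "film"]
loo = leave_one_out(tokens, score)
shap_like = shapley(tokens, score)
print("leave-one-out:", loo, ranking(loo))
print("shapley:      ", shap_like, ranking(shap_like))
```

Neither ranking is wrong. Each method answers a slightly different question about the same model, which is why it helps to say which method produced an explanation.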

[Screen Shot 2022-08-11 at 1.19.05 PM]

Ready to dive in? 🟢

For a longer walkthrough of all the 📦 packages with code snippets, web-based demos, and links to documentation/papers, check out:

👉 Colab notebook: https://bit.ly/raj_explain

🎥 YouTube video: https://youtu.be/j6WbCS0GLuY